In the typical machine learning threat model, there is some person or company who using machine learning to accomplish a task, and there is some other person or company (the adversary) who wants to disrupt that task. Maybe the task is authentication, maybe the method is identity recognition based on visual data (e.g. a camera feed), and the adversary wants to be able to assume someone else's identity by wearing a particular pair of sunglasses.

That's just one example, but it is representative of a lot of the current investigations into model risks and safety.

But what if there is no adversary? What if you have model failures because life is just hard sometimes? Here's an example of what I mean:

You may have seen this graphic before -- it's a collage of close-up photographs of blueberry muffins and chihuahua's faces, and it's really hard for humans to tell one from the other. There's no real reason why this task should be hard. You just happen to have a visual system that pays a lot of attention to shape and color, and less to things like texture, where the difference between muffins and dogs is obvious.

When an adversary crafts attacks on vision systems by modifying an image, they often resort to things humans don't really notice or care about, like subtle changes in position1 or texture2. Humans find these methods surprising, because computers don't "see" objects the way that they do.

We have a few hundred years now of cataloguing errors in the human visual system -- we call these optical illusions, and if you every study human perception these will be a large fraction of your course work -- because these failures give you clues into how the perceptual system actually works.

We could imagine a similar exercise for computer vision -- looking for computer vision illusions to understand what these black boxes rely on to make decisions. If you yourself are an adversary, this will give you good heuristics to cheaply compute attacks on vision models.

In Natural adversarial examples3, Hendrycks et al. do this for image classification algorithms. This task involves taking a centered, cropped, cleaned image of one thing, and assigning a categorical label to it, like dog or cat, where the number of labels is fixed and given in advance.

Computers are very good at this task on the current set of benchmark datasets -- close to the theoretical best possible score, and frequently better than humans. This does not imply, however, that they never fail. Sometimes they fail in very strange ways.

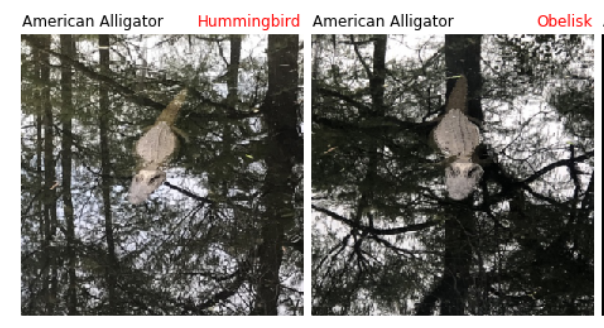

Here is an example of two photographs of the same alligator, taken about one second apart, and given to an image classification algorithm (resnet-50, in this case). The model doesn't only fail to recognize the image as an alligator -- it misclassifies it in two wildly different directions! First saying it's a bird, then an obelisk (this is the word for a tall stone structure, like the Washington monument).

This instability is one of the failure modes that Hendrycks et al. discuss in the paper, and it's one we've already seen matters a lot for generating adversarial attacks. The changes don't need to be large, because the classification model has a brittle representation of what makes an alligator an alligator.

Is this interesting?

Here are a few more useful ones, if you are thinking about making your own hard-to-classify model inputs (for good or evil):

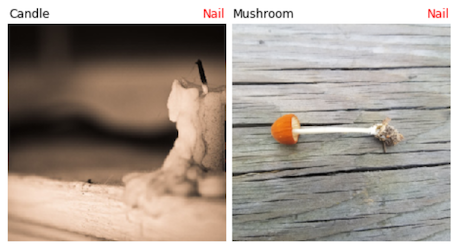

1. vision models care a lot about backgrounds

The prototypical example you hear about this one is that computer vision models think anything in the ocean is a boat. They pay attention to the background of the image -- the water -- since this is a strong signal of what the photograph is likely to contain. Statistically speaking this is a strong signal to rely on, but only given that particular dataset. As a human, you are aware that a boat is still a boat when on land, but because most photographs of boats are while at sea (and not while on a trailer attached to the back of a pickup), the model doesn't learn that the water is only a correlated signal and not a sufficient one.

Here are examples from the paper: first, anything is a nail if it is on top of a piece of wood,

and second, anything is a hummingbird if it is front of a hummingbird feeder.

The implications here are pretty obvious for an adversary. If you want something to appear innocuous, put it in front of a two by four.

2. vision models care a lot about texture and unusual colors

Here's an example, shared with me by someone who used to work at Yelp: people take a lot of photographs of themselves at restaurants, but if your business is about selling people on restaurants, you really just want the photograph to show the food (and not the people next to it). So you do a reasonable thing, which is training a model to crop down images to just the food bearing pixels from the original image. It works pretty well most of the time, except that it always leaves in fingers holding the plates of food.

Why?

Fingers are the approximate color and texture of hot dogs. Which are a food.

Here are three examples like this from the paper. First, anything yellow is a banana, no matter how it is shaped:

Second, smooth gray things are sea lions

Third, anything with vertical slats is a rocking chair

This one is a little trickier to put in practice in real life, because textures of things aren't easy to change unless you can modify the digital representation of something before it gets to a model. But you can easily paint anything yellow.

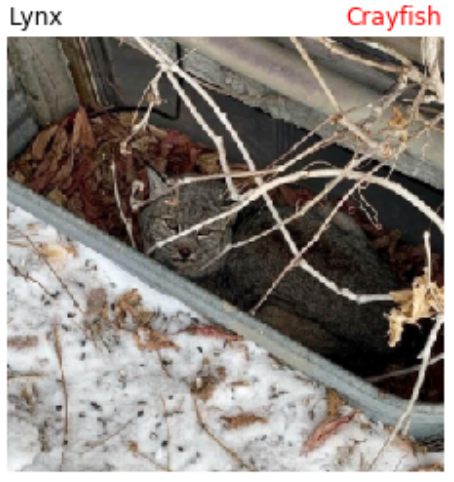

3. vision models get confused by occlusion

This might be the easiest attack mode to deploy in real life. If you don't want to be discovered, put something in front of your face! Now it would be trivial to put an object inside of a box, occluding it, and then claim a machine learning model has failed to classify it correctly.

But the object doesn't need to be completely occluded -- or even hidden at all -- as long as there is something prominent closer to the camera.

Here's an example from the paper. The cat in this photograph is clearly visible. But because there are branches in the foreground, the image recognition model skips over the cat behind the bare, leafless tree branches, and instead fixates on some leaves in the snow that I guess do sort of look like crawfish.

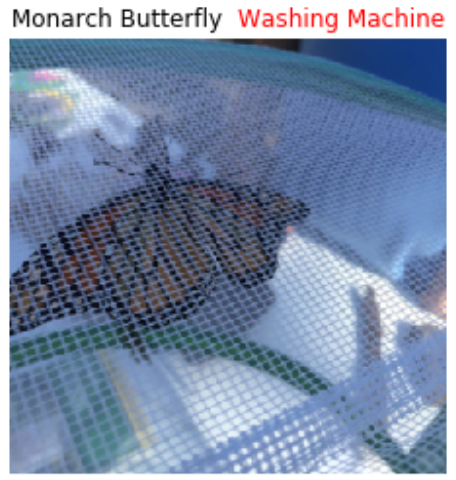

Here's another example I really like -- put a mesh screen in front of anything and it becomes a washing machine.

In conclusion, with enough yellow paint, you can make anything bananas, B-A-N-A-N-A-S.4 (To purchase this banana mug, please visit the adversarial designs banana coffee mug page)

-

Y. Zhang, W. Ruan, F. Wang, and X. Huang, “Generalizing Universal Adversarial Attacks Beyond Additive Perturbations,” arXiv:2010.07788 [cs], Oct. 2020 [Online]. Available: http://arxiv.org/abs/2010.07788

-

D. Tsipras, S. Santurkar, L. Engstrom, A. Turner, and A. Madry, “Robustness May Be at Odds with Accuracy,” arXiv:1805.12152 [cs, stat], Sep. 2019 [Online]. Available: http://arxiv.org/abs/1805.12152

-

D. Hendrycks, K. Zhao, S. Basart, J. Steinhardt, and D. Song, “Natural Adversarial Examples,” arXiv:1907.07174 [cs, stat], Jul. 2019 [Online]. Available: http://arxiv.org/abs/1907.07174

-

apologies to Gwen Stefani