Maybe. It depends a little on how you do it and a lot on why.

I am not a lawyer, and this blog post should not be construed as legal advice. But other people who are lawyers or judges have written about this, so we can review what they have said here.

The relevant federal statute is the Computer Fraud and Abuse Act (CFAA)[1], which contains the following provisions:

> Whoever
>
> (2) intentionally accesses a computer without authorization or exceeds authorized access, and thereby obtains information from any protected computer
>
> (5) knowingly causes the transmission of a program, information, code, or command, and as a result of such conduct, intentionally causes damage without authorization, to a protected computer
>
> ... shall be punished as provided in subsection (c) of this section.

Nothing from this section has been used to prosecute an attack on a machine learning model (yet), but we do have some case history from regular old hacking that we can draw some inferences from. To wit:

1. it is probably illegal if you were not given explicit access to the model

Section 2 is concerned with two things: preventing hackers from accessing computers they shouldn't, and preventing company employees from accessing data that they shouldn't. The first case is the classic example of someone cracking a password to gain access to another company's network, then exfiltrating data.

The second case involves someone who has physical access to the computers (e.g. because they work in the office) and perhaps login access to some computers, but not to others, or not to the database, or not to the admin account. Think of this as the disgruntled employee case.

A couple of non-obvious things to note about this:

- Kumar et al.[2] clarify in their 2020 paper that "any protected computer" has been read by the courts to mean "any computer"; and,

- in the recent Supreme Court case *Van Buren v. United States*[3], the Court decided that violating a website's terms of service does not constitute "exceeding authorized access"[4].

So any model attack that relies on unauthorized access is likely to be prosecutable under section 2. This would include machine learning supply chain attacks, which involve uploading tainted models or tainted source code in the hope of infecting someone else's computer.

2. it is probably illegal if it causes damage to whoever owns the model

This section is focused more on outcomes than on methodology. The key phrases here are "knowingly causes the transmission" and "intentionally causes damage". If you were to accidentally transmit some information that caused someone else's model to malfunction, that may not constitute a violation of the CFAA. Likewise, if you caused damage by accident, that might not be a violation either. In other words, intent matters a great deal here.

Data poisoning attacks -- where an attacker creates malicious data that affects models trained on those data -- are likely to meet both criteria: knowingly causing the transmission and intentionally causing damage.

Evasion attacks, where an attacker creates malicious data that causes a model to misbehave while in use, do not damage the model per se -- it still works just fine for normal inputs -- and so are probably not illegal.

But what happens if a model ends up training on your evasion attack data? We saw in "Adversarial attacks are poisonous" that evasion attacks on a model can turn into availability attacks if the examples are used as training data. Whether or not you intended to cause damage is likely to matter here, and possibly also whether you "knowingly cause[d] the transmission". If you are wearing an adversarial t-shirt and someone else takes your photograph and uses it as training data, that might be less problematic than an attacker uploading hundreds of evasion attack examples to Wikimedia.

A related concern is models that receive online updates based on user feedback. Knowingly providing malicious feedback that disrupts a model's ability to function may constitute a violation of section 5.

3. just because it's not illegal doesn't mean it's zero risk

There are a number of model attacks that do not appear to be covered by the CFAA. For example, given enough queries, it's possible to recover information about the training data set, which could be problematic if the model was trained on sensitive data (financial data, health data, etc.). This kind of data exfiltration is probably not illegal, but is almost certainly in violation of whatever terms of service you have agreed to.

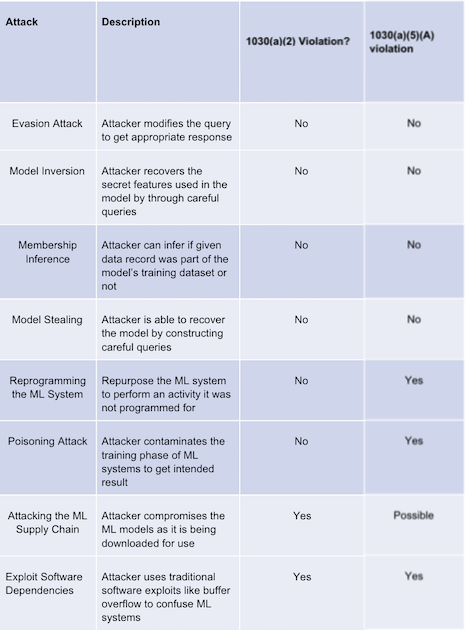

The table above summarizes the legality of different kinds of machine learning model attacks. It is taken from Kumar et al.[2] and modified slightly in light of the recent Supreme Court decision.


1. https://www.law.cornell.edu/uscode/text/18/1030
2. R. S. S. Kumar, J. Penney, B. Schneier, and K. Albert, "Legal Risks of Adversarial Machine Learning Research," p. 14.
3. https://www.supremecourt.gov/opinions/20pdf/19-783_k53l.pdf
4. Thomas dissented, because of course he did.